Dynamic environments are a key challenge in robot mapping

Thus, detecting and segmenting moving objects in sensor data is essential for building consistent maps, making future state predictions, avoiding collisions, and planning. In recent work, Xieyuanli Chen et al. tackled the problem of detecting moving objects in 3D laser range scans recorded by a LiDAR sensor that is typically mounted on an autonomous car.

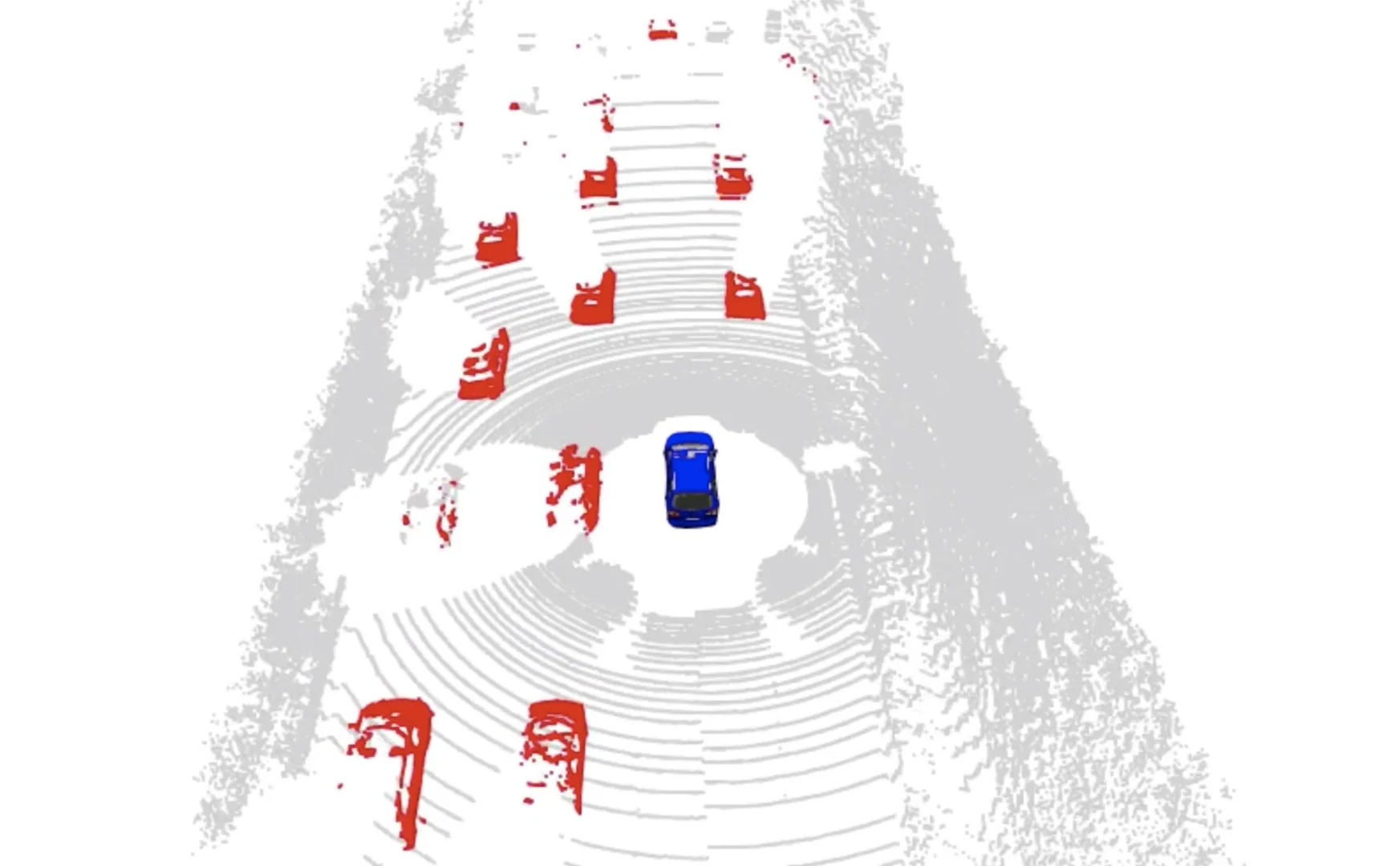

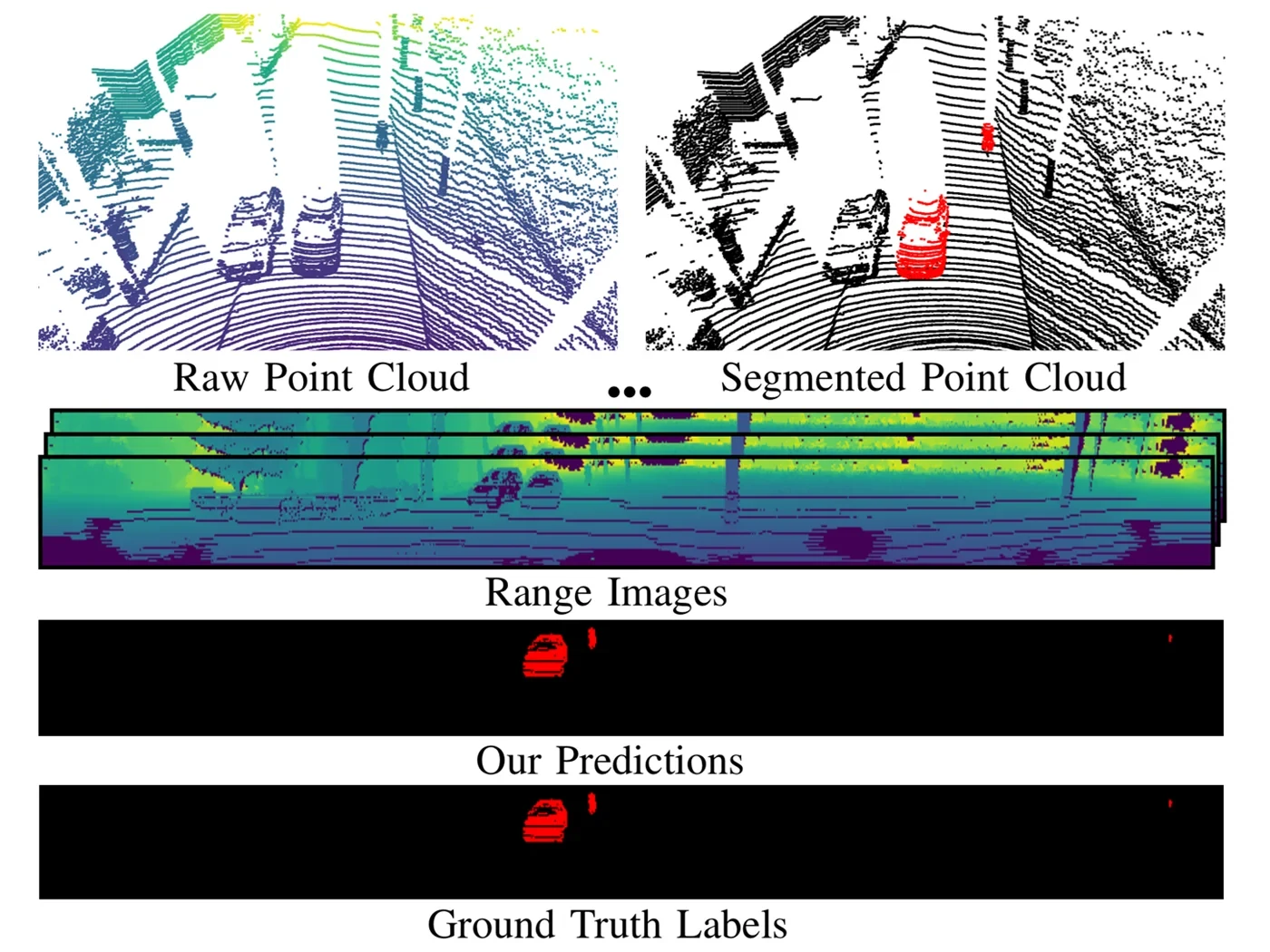

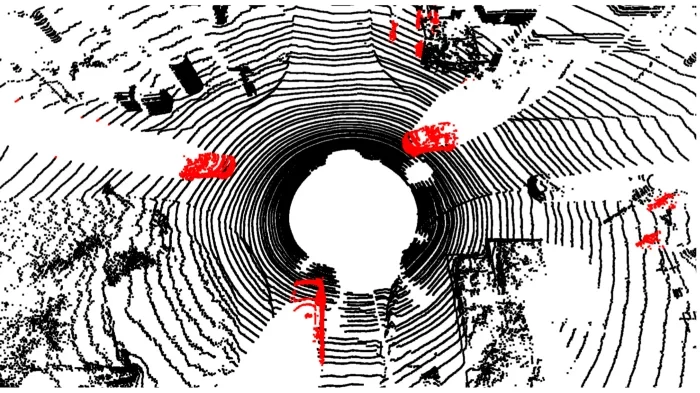

The paper addresses the problem of moving object segmentation from 3D LiDAR scans and proposes a novel LiDAR-only approach that provides relevant information to autonomous robots and other vehicles in real time. Instead of segmenting a full 3D point cloud semantically, i.e., predicting semantic classes such as vehicles, pedestrians, and roads, the approach segments the scene only into moving and static objects. Thus, objects that are actually moving are separated from the static scene.

The approach also distinguishes between moving cars and parked cars.

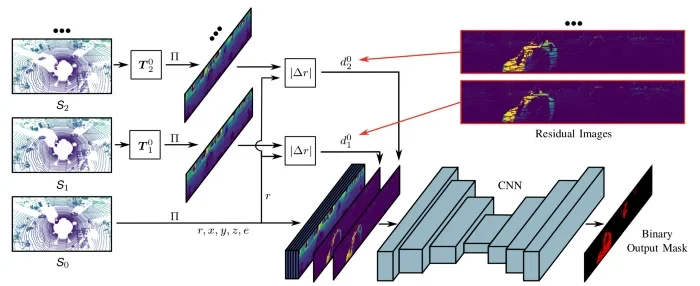

The proposed approach exploits sequential range images from a rotating 3D LiDAR sensor as an intermediate representation, combined with a convolutional neural network (CNN). This means the approach does not work on the 3D point clouds directly but on an image-like representation.

This has the advantage that a CNN with a 2D input can be used, which is more efficient than a comparable approach working directly on 3D point clouds. To be able to detect motion, the approach considers a sequence of such range images. Overall, the approach runs at about 20 Hz and is thus faster than the typical frame rate of the sensor.
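
To make the image-like representation more concrete, here is a minimal sketch of a spherical projection that turns one LiDAR scan into a range image. It is not taken from the authors' code. The function name, the image size (64 x 2048), the vertical field of view (+3° to -25°), and the use of -1 for empty pixels are all assumptions chosen for illustration; adapt them to your sensor.

```python
import numpy as np

def project_to_range_image(points, height=64, width=2048,
                           fov_up_deg=3.0, fov_down_deg=-25.0):
    """Project an (N, 3) array of x, y, z points to a (height, width) range image.

    All default parameters are illustrative assumptions, not values
    confirmed by the paper. Empty pixels are marked with -1.
    """
    fov_up = np.radians(fov_up_deg)
    fov_down = np.radians(fov_down_deg)
    fov = fov_up - fov_down

    # Range (distance to the sensor) of every point; drop points at the origin.
    ranges = np.linalg.norm(points[:, :3], axis=1)
    valid = ranges > 0
    points, ranges = points[valid], ranges[valid]

    # Horizontal (yaw) and vertical (pitch) angle of each point.
    yaw = np.arctan2(points[:, 1], points[:, 0])
    pitch = np.arcsin(points[:, 2] / ranges)

    # Map the angles to pixel coordinates and clamp them to the image.
    u = 0.5 * (1.0 - yaw / np.pi) * width
    v = (1.0 - (pitch - fov_down) / fov) * height
    u = np.clip(np.floor(u), 0, width - 1).astype(np.int32)
    v = np.clip(np.floor(v), 0, height - 1).astype(np.int32)

    # Write far points first so that the closest point per pixel is kept.
    order = np.argsort(ranges)[::-1]
    image = np.full((height, width), -1.0, dtype=np.float32)
    image[v[order], u[order]] = ranges[order]
    return image
```

Each pixel then stores the distance to the closest point seen in that viewing direction, so a standard 2D CNN can consume the scan like an ordinary single-channel image.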

The method combines a short sequence of range images, computes difference images, and uses a convolutional neural network to turn this information into a binary mask of moving vs. non-moving objects.
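
The difference images can be sketched as follows. This is a simplified illustration rather than the authors' implementation: it assumes the previous range images have already been transformed into the current sensor frame (for example using odometry) and re-projected, and it uses a normalized absolute range difference as one plausible choice of residual. The function names and the convention that non-positive values mark empty pixels are hypothetical.

```python
import numpy as np

def residual_image(current, previous):
    """Per-pixel normalized range difference between two aligned range images.

    Assumes both images have the same shape, are expressed in the current
    sensor frame, and use non-positive values for empty pixels.
    """
    valid = (current > 0) & (previous > 0)
    residual = np.zeros_like(current, dtype=np.float32)
    # Large values hint at a change in range, i.e. potential motion.
    residual[valid] = np.abs(current[valid] - previous[valid]) / current[valid]
    return residual

def stack_network_input(current, previous_images):
    """Stack the current range image with one residual channel per past scan."""
    channels = [current] + [residual_image(current, p) for p in previous_images]
    return np.stack(channels, axis=0)  # shape: (1 + len(previous_images), H, W)
```

A segmentation CNN would take the stacked array as its multi-channel input and predict, for every pixel, whether it belongs to a moving or a non-moving object.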

This new method shows strong segmentation performance in urban environments, for example on KITTI. Besides an in-depth evaluation, the paper also provides a new benchmark for LiDAR-based moving object segmentation that builds on SemanticKITTI. Furthermore, Xieyuanli Chen provides his code so that everyone can run and test it on their own data. You may use it to identify moving objects on the fly, or during post-processing to clean your maps by feeding your SLAM algorithm with masked LiDAR range images.
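
As a rough illustration of the masking step, the sketch below invalidates all pixels that a binary mask flags as moving before the range image is handed to a SLAM front end. The function name and the use of -1 as the invalid value are assumptions made for this example; the mask is assumed to come from whatever segmentation model you run.

```python
import numpy as np

def remove_moving_pixels(range_image, moving_mask, invalid=-1.0):
    """Return a copy of the range image with moving pixels marked invalid.

    moving_mask is assumed to be an array of the same shape in which
    non-zero entries mark pixels predicted as moving.
    """
    if range_image.shape != moving_mask.shape:
        raise ValueError("range image and mask must have the same shape")
    masked = range_image.copy()
    masked[moving_mask.astype(bool)] = invalid
    return masked
```

The original image is left untouched, so the unmasked scan remains available for other modules such as tracking.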

Further reading:

- X. Chen, S. Li, B. Mersch, L. Wiesmann, J. Gall, J. Behley, and C. Stachniss: “Moving Object Segmentation in 3D LiDAR Data: A Learning-based Approach Exploiting Sequential Data,” IEEE Robotics and Automation Letters (RA-L), 2021.

https://www.doi.org/10.1109/LRA.2021.3093567

Source Code:

Benchmark:

Trailer video: