Efficient and fast compression is key for operating in the real world

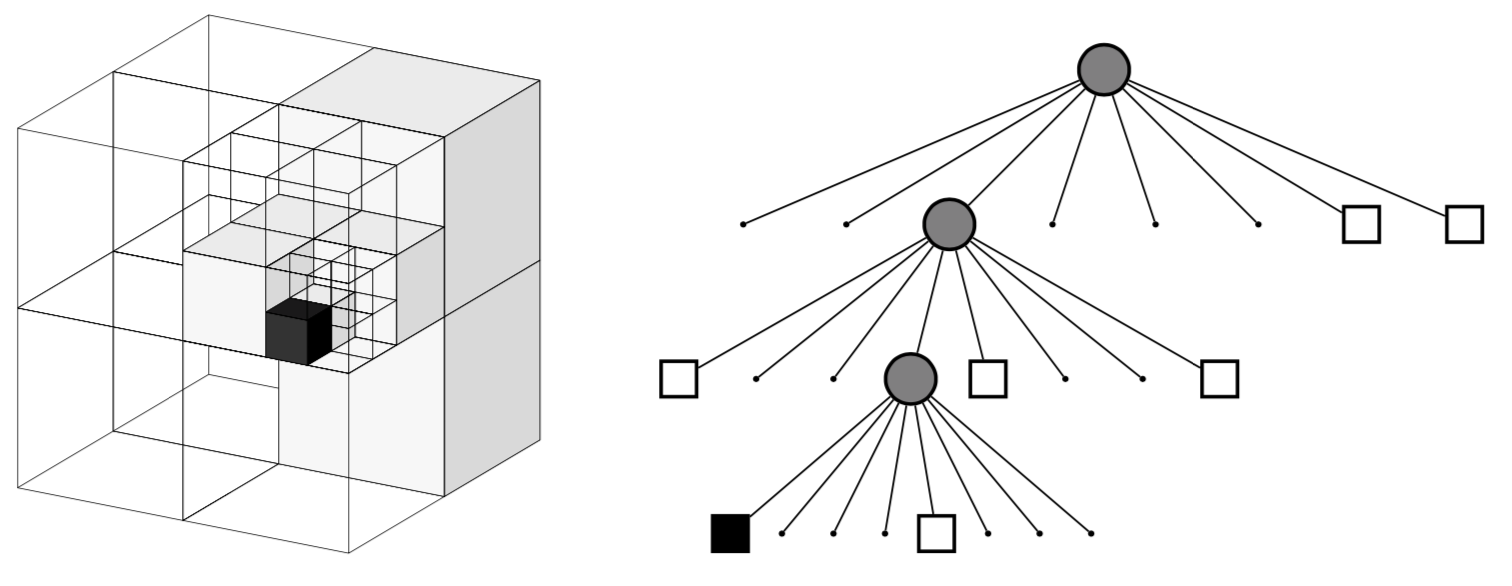

Sensor data and map information need to be compressed in order to be stored and processed. Finding an efficient representation that allows for compact storage and fast querying is a long-standing topic in robotics, computer graphics, and other disciplines. A popular choice is the octree, which offers a hierarchical, recursive subdivision of 3D space. Techniques such as OctoMap, which build on these trees, have been around for years and form the gold standard today.
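To make the idea of hierarchical, recursive 3D storage concrete, here is a minimal octree sketch in Python. It is an illustration only and not OctoMap's implementation or API: the names `OctreeNode`, `child_index`, `insert`, and `count_nodes` are hypothetical, and the tree stores plain occupancy rather than the probabilistic occupancy OctoMap maintains.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass
class OctreeNode:
    """A cubic cell; it is split into eight children only where points fall."""
    center: Point
    half_size: float
    children: List[Optional["OctreeNode"]] = field(
        default_factory=lambda: [None] * 8
    )
    occupied: bool = False


def child_index(center: Point, p: Point) -> int:
    """Pick one of 8 octants: one bit per axis, set if p is on the upper side."""
    return sum(int(p[axis] >= center[axis]) << axis for axis in range(3))


def insert(node: OctreeNode, p: Point, max_depth: int) -> None:
    """Mark the cells containing p as occupied, down to max_depth levels."""
    node.occupied = True
    if max_depth == 0:
        return
    idx = child_index(node.center, p)
    if node.children[idx] is None:
        h = node.half_size / 2
        # Shift the child center by +h or -h along each axis, per the octant bits.
        child_center = tuple(
            c + (h if (idx >> axis) & 1 else -h)
            for axis, c in enumerate(node.center)
        )
        node.children[idx] = OctreeNode(child_center, h)
    insert(node.children[idx], p, max_depth - 1)


def count_nodes(node: OctreeNode) -> int:
    """Number of allocated cells; empty space costs nothing."""
    return 1 + sum(count_nodes(c) for c in node.children if c is not None)


root = OctreeNode(center=(0.0, 0.0, 0.0), half_size=8.0)
insert(root, (1.0, 1.0, 1.0), max_depth=3)
insert(root, (1.1, 1.1, 1.1), max_depth=3)  # lands in the same leaf cell
print(count_nodes(root))  # 4: the root plus one cell per level
```

Nearby points share cells, and unoccupied regions are never allocated, which is where the compact storage comes from. The leaf size is fixed by `max_depth`, so the resolution still has to be chosen up front.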

Machine learning offers new means for compression

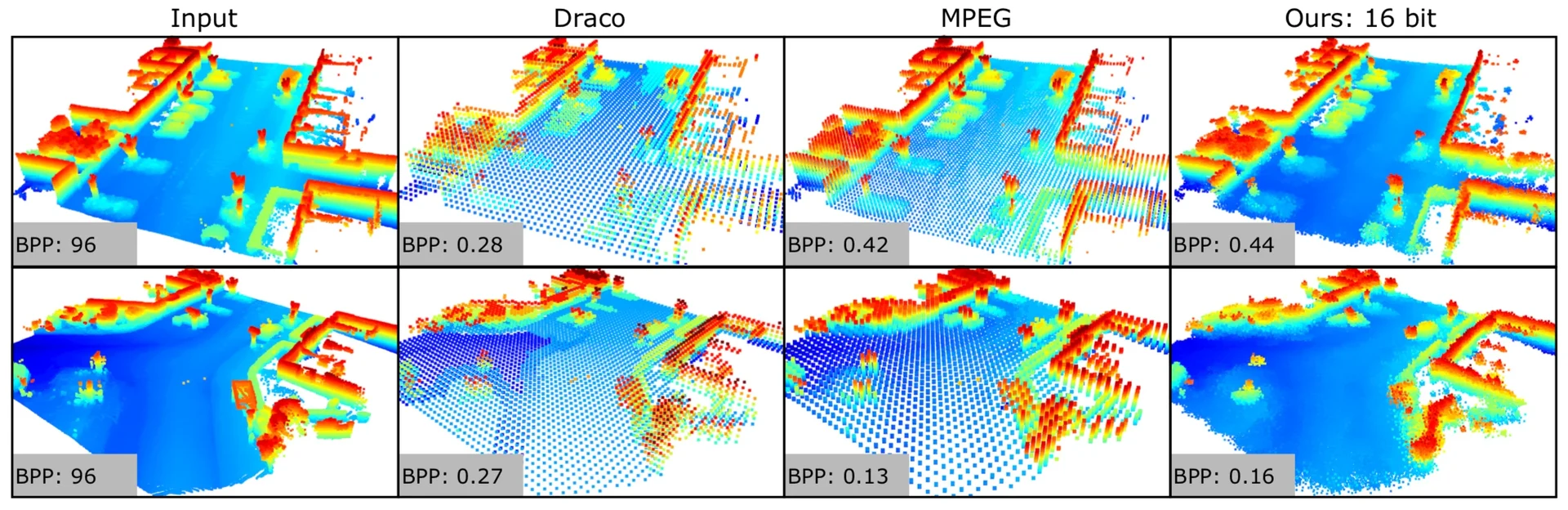

A recent work by Louis Wiesmann et al. proposes a new way of compressing dense 3D point cloud maps using deep neural networks. The method computes a compact scene representation for 3D point cloud data obtained from autonomous vehicles in large environments.

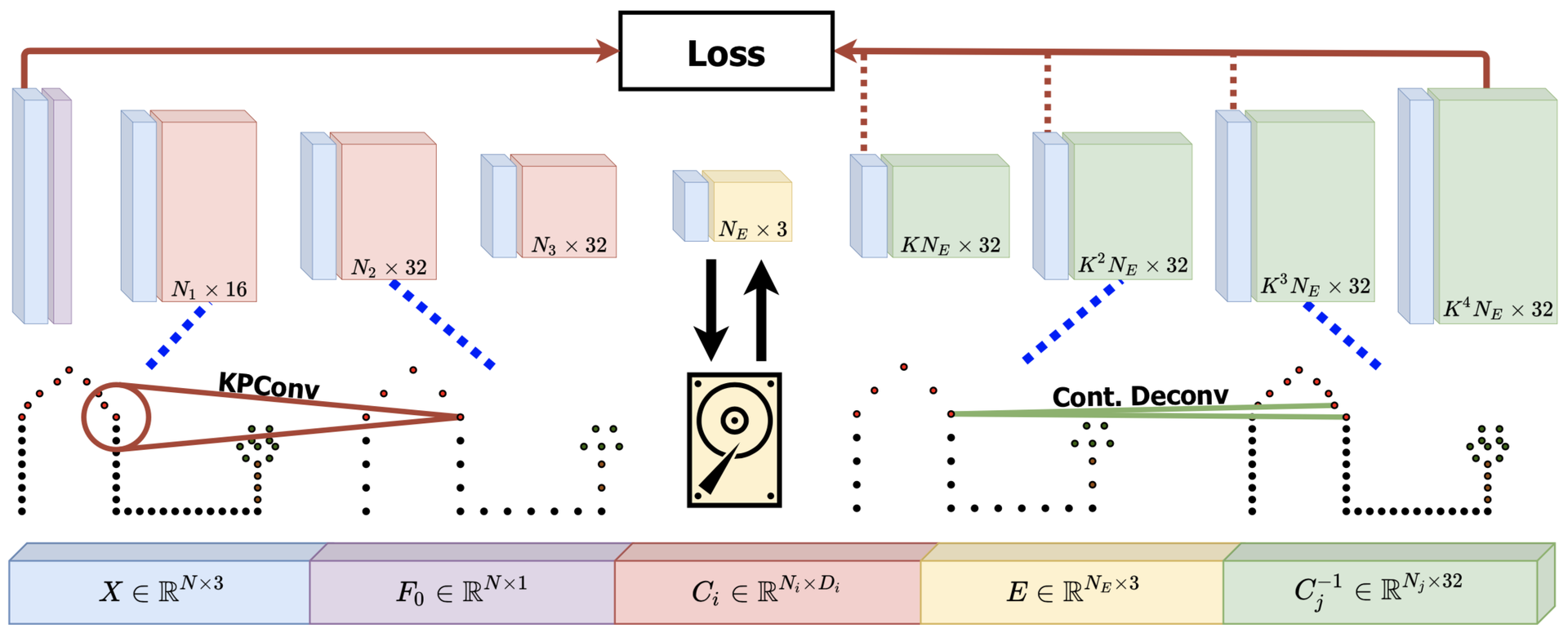

It tackles the problem of compression by learning a set of local feature descriptors from which the point cloud can be reconstructed efficiently and effectively. The paper proposes a novel deep convolutional autoencoder architecture that operates directly on the points themselves, so that no voxelization is needed. In contrast to OctoMap or occupancy voxel grids, no discretization of the space needs to be computed. This is a great advantage, since there is no need to commit to a certain resolution beforehand. The work also describes a deconvolution operator to upsample point clouds from the compressed representation, which decompresses the range data to an arbitrary density.
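The cost of committing to a resolution can be seen in a small sketch of the voxelization step that the learned approach avoids. This illustrates the voxel-grid baseline only, not the paper's network; the helper names `voxelize` and `voxel_centers` and the sample points are made up for the example.

```python
import math
from typing import Iterable, List, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxelize(points: Iterable[Point], resolution: float) -> Set[Voxel]:
    """Map each point to the integer index of the grid cell that contains it."""
    return {tuple(math.floor(c / resolution) for c in p) for p in points}


def voxel_centers(voxels: Set[Voxel], resolution: float) -> List[Point]:
    """Reconstruct one point per occupied cell, placed at the cell center."""
    return [tuple((i + 0.5) * resolution for i in v) for v in sorted(voxels)]


points = [(0.10, 0.10, 0.10), (0.30, 0.10, 0.10), (0.90, 0.10, 0.10)]

coarse = voxelize(points, resolution=0.5)
fine = voxelize(points, resolution=0.25)

print(len(coarse))  # 2: the first two points collapse into one cell
print(len(fine))    # 3: every point keeps its own cell
print(voxel_centers(coarse, 0.5))
```

Once the points are stored at the coarse resolution, the detail separating the first two points is gone and cannot be recovered later. A finer grid keeps it but needs more cells, which is the trade-off a method working on the raw points does not have to fix in advance.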

Their paper shows that the learned compression achieves better reconstructions at the same bit rate than other state-of-the-art compression algorithms. It furthermore demonstrates that the approach generalizes well to different LiDAR sensors.

Further reading:

- L. Wiesmann, A. Milioto, X. Chen, C. Stachniss, and J. Behley, “Deep Compression for Dense Point Cloud Maps,” IEEE Robotics and Automation Letters (RA-L), vol. 6, pp. 2060–2067, 2021.

https://www.doi.org/10.1109/LRA.2021.3059633

Source Code:

Trailer video: